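Following the TS-932X, QNAP released the TS-963X, a NAS built around an AMD x86 CPU, and I ordered one right away. Not long after I placed the order, QNAP announced the TS-951X, an Intel x86 CPU NAS priced only slightly higher than the TS-963X. You can never keep up with how fast vendors launch new models.

For the spec differences among these three 9-bay NAS units, refer to the official site directly. (Official site: QNAP's complete 9-bay NAS lineup)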

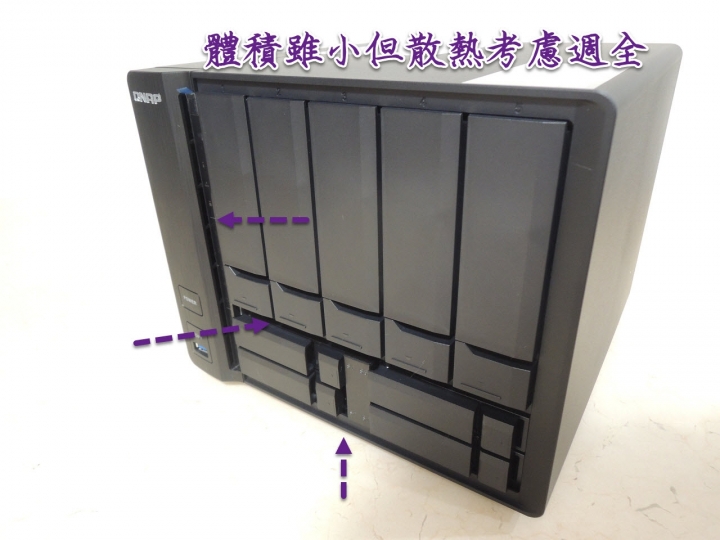

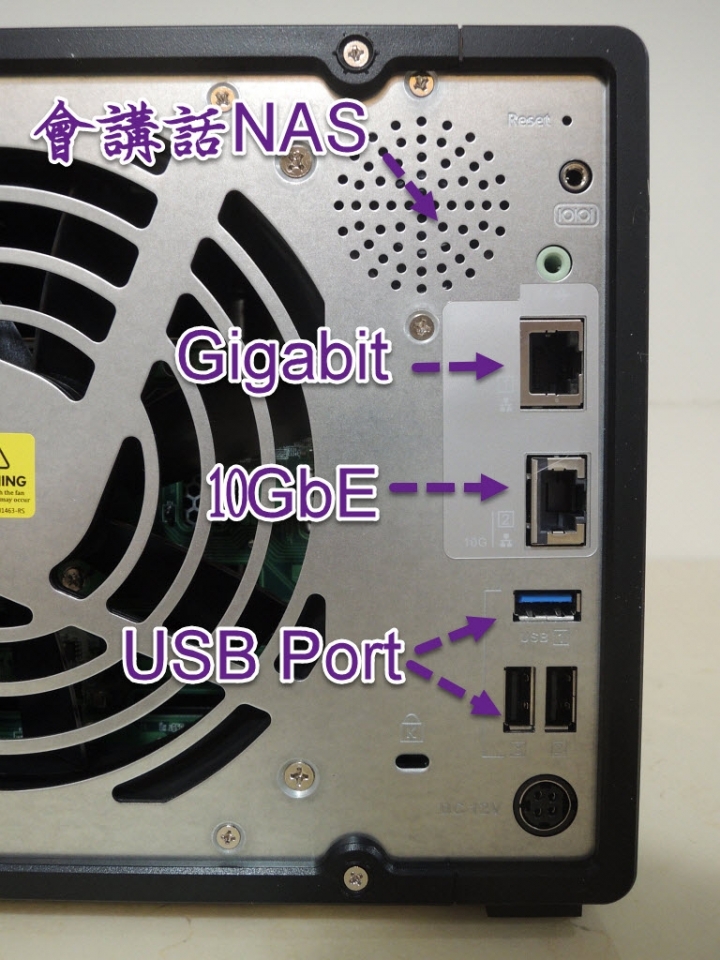

This unboxing covers the TS-963X, an AMD-based 10GbE NAS.

| TS-963X comes 'home' |

| Quick performance test |

I started with a quick test of this 10GbE NAS's read/write performance.

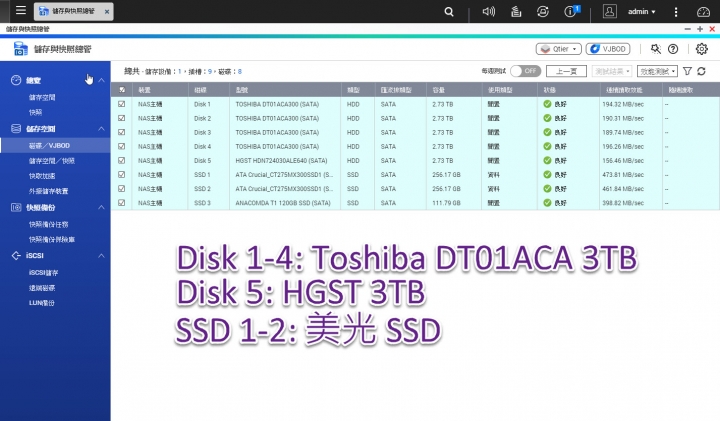

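The drives used for testing were Micron 250GB SSDs and Toshiba DT01ACA300 3TB desktop hard drives.

A single hard drive transfers at about 190MB/s. The fifth drive is an HGST 3TB NAS drive, which reads at about 150MB/s.

A Micron SSD reads at about 460MB/s in this NAS.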

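With two Micron SSDs in RAID 0, I expected read/write performance of roughly 1000MB/s. I tested internal read/write performance with fio; the output below can be read as roughly 900MB/s or more for reads and 700MB/s or more for writes. Write performance is somewhat lower than expected.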

```
[admin@NAS28ABD6 Multimedia]# fio fio.conf
read: (g=0): rw=read, bs=64K-64K/64K-64K/64K-64K, ioengine=libaio, iodepth=16
write: (g=1): rw=write, bs=64K-64K/64K-64K/64K-64K, ioengine=libaio, iodepth=16
fio-2.2.10
Starting 2 processes
Jobs: 1 (f=1): [_(1),W(1)] [100.0% done] [0KB/670.1MB/0KB /s] [0/10.8K/0 iops] [eta 00m:00s]
read: (groupid=0, jobs=1): err= 0: pid=455: Tue Jun 5 03:28:26 2018
  read : io=16384MB, bw=965651KB/s, iops=15088, runt= 17374msec
    slat (usec): min=31, max=1088, avg=36.37, stdev= 4.75
    clat (usec): min=140, max=8634, avg=1020.04, stdev=428.44
     lat (usec): min=184, max=8676, avg=1057.10, stdev=428.43
    clat percentiles (usec):
     | 1.00th=[ 221], 5.00th=[ 350], 10.00th=[ 466], 20.00th=[ 612],
     | 30.00th=[ 756], 40.00th=[ 892], 50.00th=[ 1012], 60.00th=[ 1144],
     | 70.00th=[ 1272], 80.00th=[ 1416], 90.00th=[ 1576], 95.00th=[ 1704],
     | 99.00th=[ 1864], 99.50th=[ 1912], 99.90th=[ 2008], 99.95th=[ 2672],
     | 99.99th=[ 7648]
    bw (KB /s): min=937600, max=973184, per=100.00%, avg=965620.71, stdev=6593.75
    lat (usec) : 250=1.89%, 500=10.83%, 750=16.83%, 1000=19.23%
    lat (msec) : 2=51.11%, 4=0.07%, 10=0.03%
  cpu : usr=10.89%, sys=59.37%, ctx=159199, majf=0, minf=266
  IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=100.0%, 32=0.0%, >=64=0.0%
     submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.1%, 32=0.0%, 64=0.0%, >=64=0.0%
     issued : total=r=262144/w=0/d=0, short=r=0/w=0/d=0, drop=r=0/w=0/d=0
     latency : target=0, window=0, percentile=100.00%, depth=16
write: (groupid=1, jobs=1): err= 0: pid=982: Tue Jun 5 03:28:26 2018
  write: io=16384MB, bw=772041KB/s, iops=12063, runt= 21731msec
    slat (usec): min=25, max=3551, avg=40.33, stdev= 9.37
    clat (usec): min=150, max=158904, avg=1246.84, stdev=1485.86
     lat (usec): min=190, max=158943, avg=1287.91, stdev=1485.89
    clat percentiles (usec):
     | 1.00th=[ 652], 5.00th=[ 964], 10.00th=[ 972], 20.00th=[ 1048],
     | 30.00th=[ 1128], 40.00th=[ 1192], 50.00th=[ 1208], 60.00th=[ 1272],
     | 70.00th=[ 1304], 80.00th=[ 1368], 90.00th=[ 1448], 95.00th=[ 1464],
     | 99.00th=[ 1864], 99.50th=[ 3536], 99.90th=[ 4896], 99.95th=[ 5152],
     | 99.99th=[95744]
    bw (KB /s): min=542208, max=793728, per=99.99%, avg=771976.93, stdev=49383.89
    lat (usec) : 250=0.23%, 500=0.42%, 750=0.64%, 1000=11.19%
    lat (msec) : 2=86.70%, 4=0.53%, 10=0.26%, 50=0.01%, 100=0.01%
    lat (msec) : 250=0.01%
  cpu : usr=47.23%, sys=50.48%, ctx=1421, majf=0, minf=10
  IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=100.0%, 32=0.0%, >=64=0.0%
     submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.1%, 32=0.0%, 64=0.0%, >=64=0.0%
     issued : total=r=0/w=262144/d=0, short=r=0/w=0/d=0, drop=r=0/w=0/d=0
     latency : target=0, window=0, percentile=100.00%, depth=16

Run status group 0 (all jobs):
   READ: io=16384MB, aggrb=965650KB/s, minb=965650KB/s, maxb=965650KB/s, mint=17374msec, maxt=17374msec

Run status group 1 (all jobs):
  WRITE: io=16384MB, aggrb=772040KB/s, minb=772040KB/s, maxb=772040KB/s, mint=21731msec, maxt=21731msec

Disk stats (read/write):
  dm-7: ios=262144/260582, merge=0/0, ticks=267386/113937, in_queue=381780, util=99.24%, aggrios=262144/262164, aggrmerge=0/0, aggrticks=267049/114216, aggrin_queue=381489, aggrutil=99.17%
  dm-6: ios=262144/262164, merge=0/0, ticks=267049/114216, in_queue=381489, util=99.17%, aggrios=262144/262164, aggrmerge=0/0, aggrticks=264995/112075, aggrin_queue=377557, aggrutil=99.16%
  dm-4: ios=262144/262164, merge=0/0, ticks=264995/112075, in_queue=377557, util=99.16%, aggrios=65536/65543, aggrmerge=0/0, aggrticks=66158/27924, aggrin_queue=94184, aggrutil=99.18%
  dm-0: ios=0/0, merge=0/0, ticks=0/0, in_queue=0, util=0.00%, aggrios=0/0, aggrmerge=0/0, aggrticks=0/0, aggrin_queue=0, aggrutil=0.00%
  drbd1: ios=0/0, merge=0/0, ticks=0/0, in_queue=0, util=0.00%
  dm-1: ios=0/0, merge=0/0, ticks=0/0, in_queue=0, util=0.00%
  dm-2: ios=0/0, merge=0/0, ticks=0/0, in_queue=0, util=0.00%
  dm-3: ios=262144/262172, merge=0/0, ticks=264633/111699, in_queue=376738, util=99.18%
```
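The fio summary lines can be cross-checked with a little arithmetic. The sketch below converts the reported bandwidth to MB/s, confirms that IOPS times block size agrees with it, and compares the result with an idealized two-SSD stripe. It rests on assumptions: that fio 2.x reports `KB` as 1024 bytes, that RAID 0 scales linearly from the roughly 460MB/s single-SSD read figure, and that this read figure is also a fair baseline for writes. All function and constant names are illustrative.

```python
# Values copied from the fio summary lines in this post.
READ_BW_KB_S = 965651    # "read : ... bw=965651KB/s"
WRITE_BW_KB_S = 772041   # "write: ... bw=772041KB/s"
READ_IOPS = 15088
WRITE_IOPS = 12063
BLOCK_SIZE_KB = 64       # bs=64K

# Assumption: ideal RAID 0 scales linearly with the number of SSDs.
SINGLE_SSD_MB_S = 460
SSD_COUNT = 2


def kb_s_to_mb_s(kb_per_s: float) -> float:
    """Convert fio's KB/s (assumed 1024-byte KB) to decimal MB/s."""
    return kb_per_s * 1024 / 1_000_000


def bw_from_iops(iops: int, block_kb: int) -> int:
    """Bandwidth in KB/s implied by an IOPS figure at a fixed block size."""
    return iops * block_kb


ideal_mb_s = SINGLE_SSD_MB_S * SSD_COUNT

for name, bw, iops in (
    ("read", READ_BW_KB_S, READ_IOPS),
    ("write", WRITE_BW_KB_S, WRITE_IOPS),
):
    mb_s = kb_s_to_mb_s(bw)
    implied = bw_from_iops(iops, BLOCK_SIZE_KB)
    print(
        f"{name}: {mb_s:.1f} MB/s, "
        f"{mb_s / ideal_mb_s:.0%} of ideal {ideal_mb_s} MB/s, "
        f"iops*bs = {implied} KB/s"
    )
```

With these inputs, reads come out near 989MB/s and writes near 791MB/s, so writes reach roughly 86% of the idealized 920MB/s stripe.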

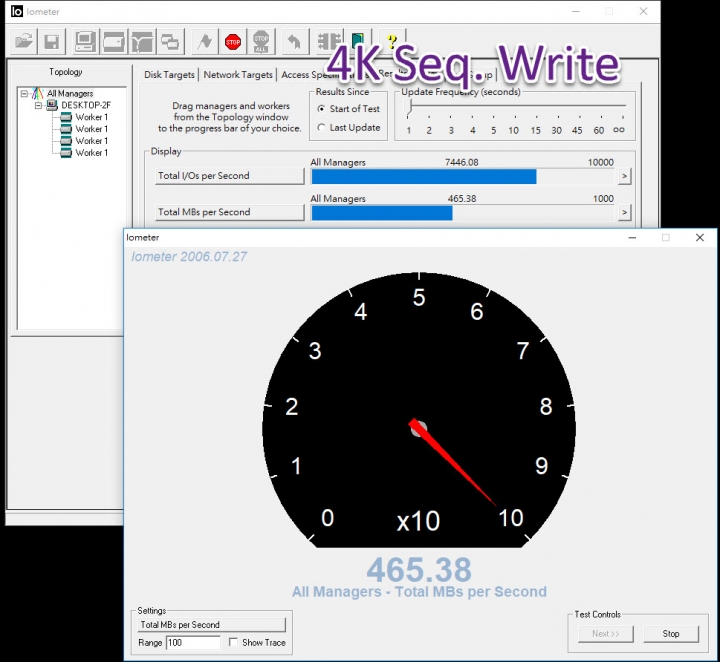

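Next I used Iometer to test 4k sequential read and write performance. The client was a Windows 10 PC (Intel i5 CPU), connected to the NAS through a QNAP QSW-1208-8C 10G switch.

These numbers are clearly lower than the earlier fio results. My own reading is that the bottleneck of the whole transfer is the 10GbE network: the NAS's internal disk I/O can exceed 900MB/s for reads and 700MB/s for writes, but throughput drops considerably once the data travels over 10GbE.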
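For context when reading network results like these, it can help to know the raw ceiling of the link and how many small requests per second it takes to approach it. The sketch below is only back-of-the-envelope arithmetic: it ignores Ethernet, TCP, and file-sharing protocol overhead, and the 10,000 IOPS input in the last line is a made-up example, not a measurement from this test. The function names are illustrative.

```python
LINK_GBIT_S = 10          # nominal 10GbE link speed
BLOCK_BYTES = 4 * 1024    # 4k request size used in the Iometer test


def line_rate_mb_s(gbit_per_s: float) -> float:
    """Raw link rate in decimal MB/s, before any protocol overhead."""
    return gbit_per_s * 1000 / 8


def iops_needed(target_mb_s: float, block_bytes: int) -> float:
    """Requests per second required to sustain a target throughput."""
    return target_mb_s * 1_000_000 / block_bytes


def throughput_mb_s(iops: float, block_bytes: int) -> float:
    """Throughput in decimal MB/s delivered by a given IOPS figure."""
    return iops * block_bytes / 1_000_000


ceiling = line_rate_mb_s(LINK_GBIT_S)
print(f"10GbE raw ceiling: {ceiling:.0f} MB/s")
print(f"4k requests/s needed to fill it: {iops_needed(ceiling, BLOCK_BYTES):,.0f}")
# Hypothetical input, for illustration only.
print(f"10,000 IOPS at 4k: {throughput_mb_s(10_000, BLOCK_BYTES):.2f} MB/s")
```

The raw ceiling works out to 1250MB/s, and filling it with 4k requests would take about 305,000 requests per second. This does not by itself show where the bottleneck sits; it only gives a scale for comparing the Iometer numbers with the fio numbers.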

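Of course, these are only the results of my own testing, and readers may also want to check the figures on the official site.

Compared with the TS-932X, I do wonder: could the TS-932X actually deliver better read/write performance than the TS-963X?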